In machine learning, the quality of annotated data largely determines what an application can achieve. From self-driving cars to medical diagnostics, data annotation shapes the accuracy and efficiency of machine learning models. This blog post walks through the best practices for annotating data, starting with an introduction to data annotation. The goal is to give you the knowledge to annotate data effectively and produce high-quality labels.

Understanding Machine Learning Paradigms

Before exploring data annotation, it helps to understand the distinctions among the main machine learning paradigms: supervised, unsupervised, and reinforcement learning. Unsupervised learning and reinforcement learning operate without labeled data, whereas supervised learning, which is prevalent in high-impact applications such as spam detection and object recognition, relies heavily on labeled datasets.

What is Data Annotation?

In machine learning, data annotation is the process of labeling data to show the outcome you want your model to predict. Labeling assigns context or meaning to data so that machine learning algorithms can learn from the labels to achieve the desired result. Once your model is deployed, you want it to recognize those features on its own and make a decision or take some action as a result.

Choosing the right data annotation tool is a critical decision that can significantly affect your machine learning project’s success. The market offers various commercial and open source options, each with its own strengths and trade-offs. Before making a choice, carefully assess the project’s needs, dataset size, and specific requirements.

The decision to involve humans, rely on automation, or adopt a hybrid approach for annotating data depends on dataset size, complexity, and domain specificity. Automated data labeling suits large, well-defined datasets but may struggle with edge cases. Human-only labeling, on the other hand, generally yields higher quality but introduces subjectivity and higher costs. Human-in-the-loop (HITL) labeling, a hybrid approach, combines human expertise with automated tools to strike a balance between accuracy and efficiency.
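One common way to set up a human-in-the-loop workflow is to let a model pre-label the data and send only its low-confidence predictions to human labelers. The snippet below is a minimal sketch of that idea, not a prescribed implementation: the `Prediction` class, the `route_predictions` function, the item IDs, and the 0.9 threshold are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    """A model-generated pre-label (hypothetical structure for this sketch)."""
    item_id: str
    label: str
    confidence: float  # model's probability for its predicted label, in [0, 1]


def route_predictions(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split pre-labels into an auto-accepted queue and a human-review queue."""
    auto_accepted: List[Prediction] = []
    needs_review: List[Prediction] = []
    for pred in predictions:
        if pred.confidence >= threshold:
            auto_accepted.append(pred)
        else:
            # Low-confidence items are likely edge cases; a human should check them.
            needs_review.append(pred)
    return auto_accepted, needs_review


preds = [
    Prediction("doc-1", "invoice", 0.97),
    Prediction("doc-2", "bank_statement", 0.62),
    Prediction("doc-3", "receipt", 0.91),
]
accepted, review = route_predictions(preds, threshold=0.9)
print("auto-accepted:", [p.item_id for p in accepted])
print("needs review:", [p.item_id for p in review])
```

The threshold is the knob that trades cost against quality: raising it sends more items to humans, lowering it trusts the model more. In practice you would tune it against a sample of items whose correct labels are already known.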

Best Practices for High-Quality Annotations

Collect the Best Data Possible:

- Ensure high-quality, consistent data collection.

- Integrate data collection into the labeling pipeline for efficiency.

Hire the Right Labelers:

- Choose labelers with domain-specific knowledge.

- Incentivize labelers for high-quality annotations.

Combine Humans and Machines:

- Leverage ML-powered tools with human oversight for accuracy.

Provide Clear Instructions:

- Ensure comprehensive and unambiguous labeling instructions.

Ensuring Precision in Data Annotation:

- Draw each bounding box tightly around the item being labeled, without extraneous space.
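To make "no extraneous space" concrete, the sketch below computes the tightest box around the foreground pixels of a small binary mask and reports how much padding a hand-drawn box has on each side. It assumes you have a mask of the labeled item available, which is not always the case; the `tight_box` and `padding` helpers and the sample data are hypothetical.

```python
from typing import List, Optional, Tuple

# (x_min, y_min, x_max, y_max) as inclusive pixel indices
Box = Tuple[int, int, int, int]


def tight_box(mask: List[List[int]]) -> Optional[Box]:
    """Return the smallest box containing every non-zero pixel, or None if empty."""
    if not mask:
        return None
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows or not cols:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


def padding(drawn: Box, tight: Box) -> Tuple[int, int, int, int]:
    """Extra pixels the drawn box has on the (left, top, right, bottom) sides."""
    return (
        tight[0] - drawn[0],
        tight[1] - drawn[1],
        drawn[2] - tight[2],
        drawn[3] - tight[3],
    )


mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
drawn = (0, 0, 4, 3)   # a loose box covering the whole image
tight = tight_box(mask)
print("tight box:", tight)
print("padding (left, top, right, bottom):", padding(drawn, tight))
```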

Layers of Review:

- Employ a hierarchical review structure for computer vision tasks.

- Have each review layer verify that labels are accurate and precise.
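One way a review layer can check bounding boxes is to compare the labeler's box with the reviewer's box using intersection over union (IoU) and escalate items where the two disagree. This is a minimal sketch under that assumption; the `flag_for_escalation` helper, the field IDs, and the 0.8 threshold are hypothetical rather than part of any specific tool.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def flag_for_escalation(
    pairs: List[Tuple[str, Box, Box]], threshold: float = 0.8
) -> List[str]:
    """Return IDs of items where labeler and reviewer boxes overlap too little."""
    return [
        item_id
        for item_id, labeler_box, reviewer_box in pairs
        if iou(labeler_box, reviewer_box) < threshold
    ]


pairs = [
    ("field-1", (0, 0, 10, 10), (0, 0, 10, 10)),    # identical boxes
    ("field-2", (0, 0, 10, 10), (0, 0, 10, 5)),     # reviewer drew half the height
    ("field-3", (0, 0, 10, 10), (20, 20, 30, 30)),  # no overlap at all
]
escalated = flag_for_escalation(pairs)
print("escalate to senior reviewer:", escalated)
```

Items that pass the check move on, and flagged items go up one level in the review hierarchy, so senior reviewers spend their time only where labelers and first-line reviewers disagree.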

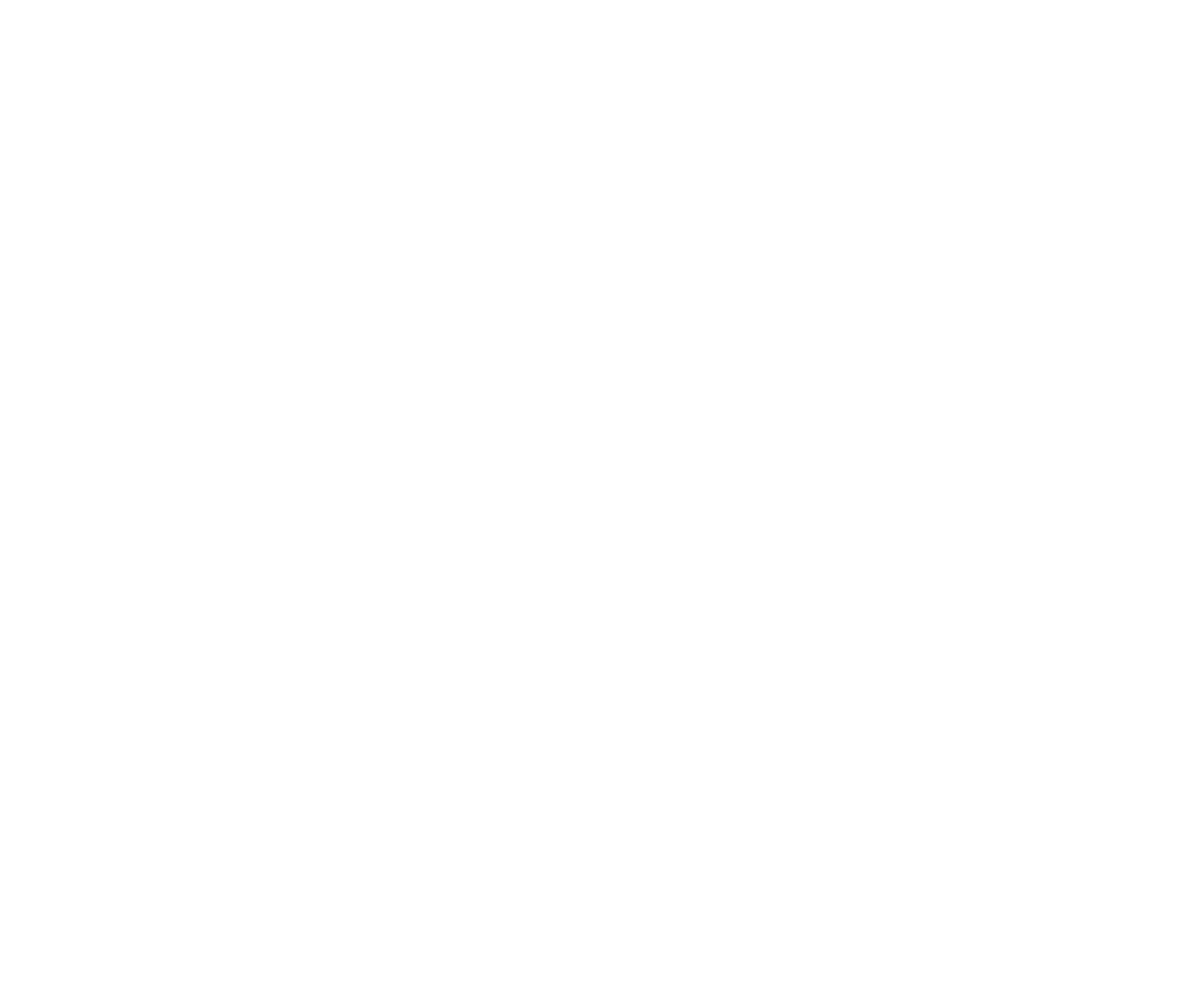

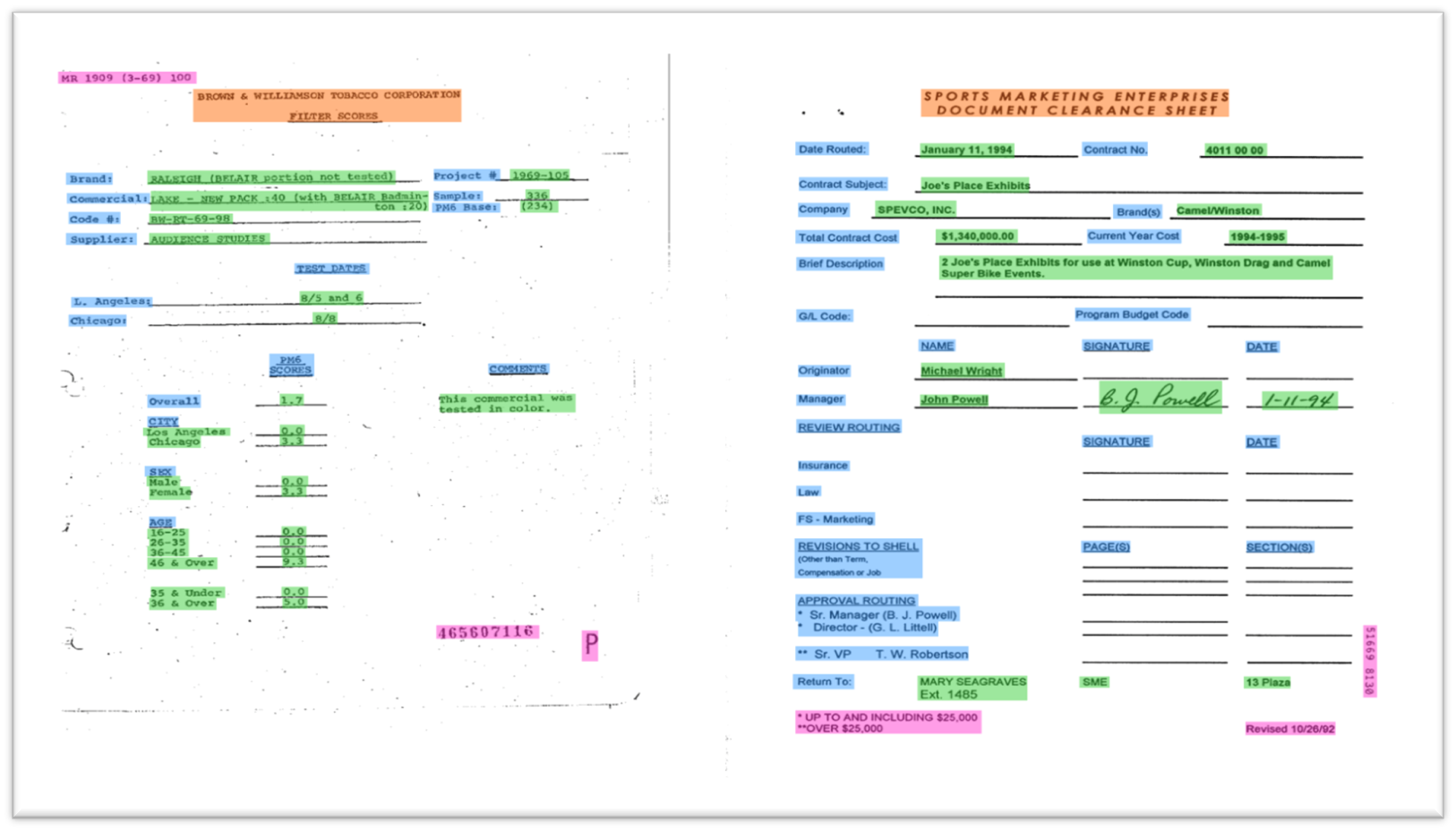

Understanding How to Annotate Data Through a Bank Statement Example

Developing a bank statement prediction model comprises three key components: data generation, annotation, and model training. First, a set of similar data samples is generated to represent the diversity of bank statements. Next, a suitable data annotation tool is used to create precise labels for each data type within these samples. The annotations include bounding boxes that outline the boundaries of specific data elements. The annotated data is then converted into a JSON file, referred to here as the LayoutLM dataset, and passed to the LayoutLM model for training.

Training the LayoutLM model involves using the FUNSD (Form Understanding in Noisy Scanned Documents) dataset for pre-training. FUNSD, designed for form understanding, helps the model learn spatial relationships and dependencies in complex layouts. During evaluation, the model performs well on tasks such as information extraction, drawing on what it learned from the FUNSD dataset. Its ability to interpret both text and layout structure contributes to its robust performance.
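The conversion from annotations to JSON can be sketched as follows. LayoutLM expects bounding box coordinates normalized to a 0-1000 scale relative to the page size, so the sketch rescales pixel coordinates before writing the records. Everything else here is an assumption made for illustration: the field names (`text`, `box`, `label`), the label values, the page size, and the sample annotations are hypothetical and may not match the export format of your annotation tool.

```python
import json
from typing import Dict, List, Sequence


def normalize_box(box: Sequence[float], page_width: float, page_height: float) -> List[int]:
    """Rescale a pixel box (x_min, y_min, x_max, y_max) to the 0-1000 range."""
    x0, y0, x1, y1 = box
    scaled = [
        1000 * x0 / page_width,
        1000 * y0 / page_height,
        1000 * x1 / page_width,
        1000 * y1 / page_height,
    ]
    # Clamp so boxes that slightly overshoot the page stay within range.
    return [max(0, min(1000, int(v))) for v in scaled]


def to_layoutlm_records(
    annotations: List[Dict], page_width: float, page_height: float
) -> List[Dict]:
    """Convert annotation-tool output (hypothetical schema) to training records."""
    return [
        {
            "text": ann["text"],
            "box": normalize_box(ann["box"], page_width, page_height),
            "label": ann["label"],
        }
        for ann in annotations
    ]


# Hypothetical annotations for one bank statement page of 850 x 1100 pixels.
annotations = [
    {"text": "Account Number", "box": (85, 110, 340, 132), "label": "HEADER"},
    {"text": "1,250.00", "box": (680, 550, 765, 572), "label": "AMOUNT"},
]
records = to_layoutlm_records(annotations, page_width=850, page_height=1100)
print(json.dumps(records, indent=2))
```

Normalizing at conversion time means scans of different resolutions end up in the same coordinate space, which is what lets the model learn layout patterns that carry across documents.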

Conclusion

By understanding the annotation approaches, building the right labeling workforce, and selecting appropriate platforms, you can create a robust data pipeline that forms the foundation for successful machine learning models. We hope the bank statement example above bridges the gap between theory and application.